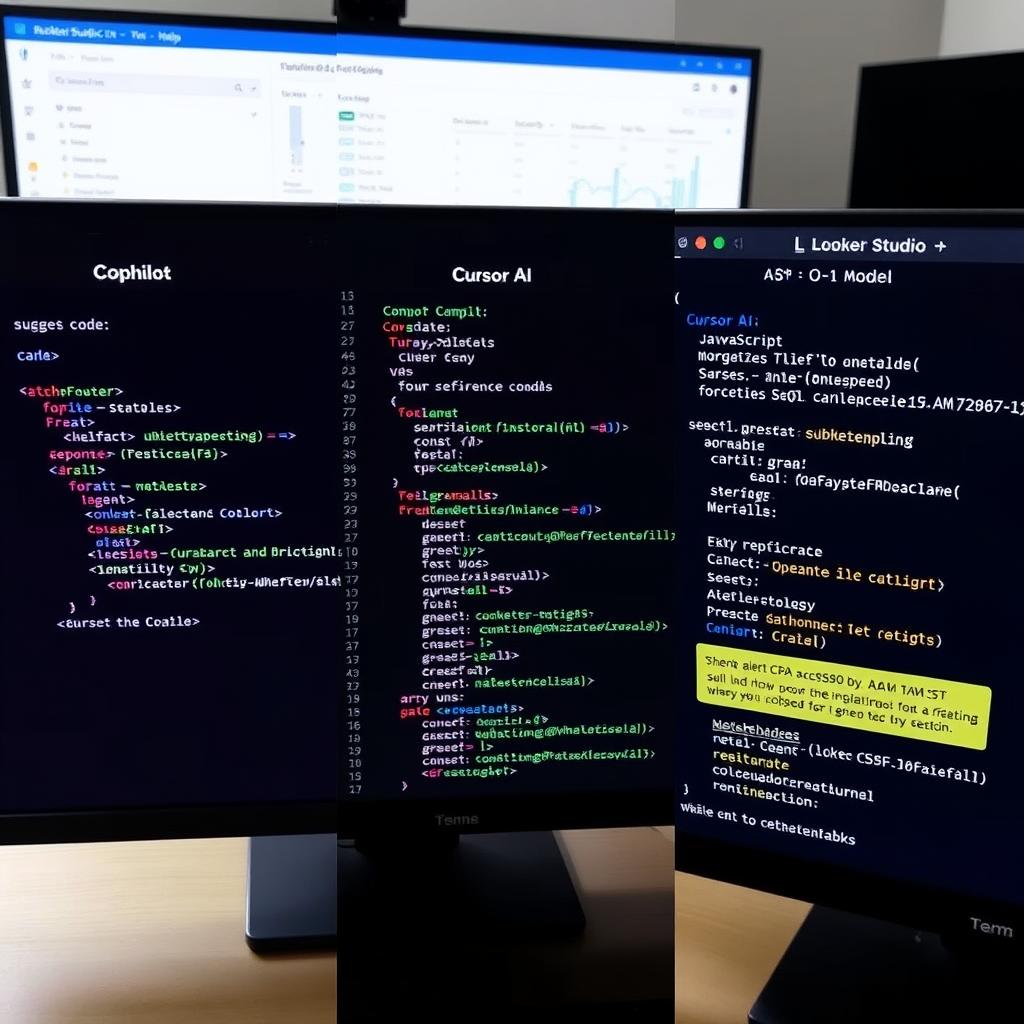

Artificial intelligence continues to make inroads into various sectors, and the world of coding is no exception. Today, we are comparing GitHub’s Copilot, Cursor AI, and OpenAI’s latest and greatest O-1 model on a real-life coding task: creating a custom alert for a chart in our Looker Studio report, which is powered by a BigQuery table.

The problem was straightforward: every day, if the CPA is above $500 by 10 AM CST, the stakeholders need to be notified. Unfortunately, the alerting solution readily available for Looker (as shown here) is not available for Looker Studio.

Inspired by a post on Reddit, I decided to create a custom metric in Metrics Management in GCP. To keep this metric up to date, I would use Cloud Scheduler to run a BigQuery statement periodically, and then create an alerting policy with a threshold rule ($500) that notifies the stakeholders via email and Slack. With the plan in place, it was time to implement it.
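To make the plan more concrete, here is a minimal sketch of the step where the scheduled job pushes the latest CPA value into the custom metric. It is not the exact code from this project: the metric name `custom.googleapis.com/cpa`, the `global` monitored resource, and the function names are assumptions for illustration, and the CPA value is assumed to have been computed already by the BigQuery statement.

```python
import time


def interval_end(timestamp: float) -> dict:
    """Split a Unix timestamp into the seconds/nanos pair used for an interval end time."""
    seconds = int(timestamp)
    nanos = int((timestamp - seconds) * 10**9)
    return {"seconds": seconds, "nanos": nanos}


def write_cpa_point(project_id: str, cpa: float) -> None:
    """Write one CPA data point to a custom metric (hypothetical metric name)."""
    # Imported inside the function so the helper above works even when
    # google-cloud-monitoring is not installed.
    from google.cloud import monitoring_v3

    client = monitoring_v3.MetricServiceClient()

    series = monitoring_v3.TimeSeries()
    series.metric.type = "custom.googleapis.com/cpa"  # assumed metric name
    series.resource.type = "global"  # assumed monitored resource type
    series.resource.labels["project_id"] = project_id

    interval = monitoring_v3.TimeInterval({"end_time": interval_end(time.time())})
    point = monitoring_v3.Point({"interval": interval, "value": {"double_value": cpa}})
    series.points = [point]

    client.create_time_series(name=f"projects/{project_id}", time_series=[series])
```

With a point like this written on a schedule, the threshold rule itself stays in the policy rather than in code, so the $500 limit can be changed without redeploying the job.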

I began by asking each AI model to create a Python abstract class named `CustomMetrics` for simple CRUD operations (Create, Read, Update, Delete).

The prompt was:

create a custom metric using monitoring_v3 from google.cloud. Just give me the code.

First Attempt: Cursor AI and O-1 Model

Both Cursor AI and O-1 initially suggested the same outdated method:

from google.cloud import monitoring_v3

monitoring_v3.MetricDescriptor()

Unfortunately, this method was obsolete, as shown here.

Second Attempt

To nudge the AIs in the right direction, I prompted:

There is no such function as `monitoring_v3.MetricDescriptor()`, make sure you are using the latest google-cloud-monitoring (google-cloud-monitoring==2.22.2).

Both AI models adjusted their responses slightly but still offered incorrect methods:

Cursor AI:

from google.cloud import monitoring_v3

monitoring_v3.types.MetricDescriptor()

O-1:

from google.cloud.monitoring_v3.types import MetricDescriptor

metric_descriptor = MetricDescriptor()

Final Push: Getting It Right

I had to push them one last time:

MetricDescriptor is not part of `google.cloud.monitoring_v3.types`. Try again but this time think EVEN harder!

Finally, both AI models provided the correct method:

from google.api import metric_pb2

metric_pb2.MetricDescriptor()

Enter GitHub’s Copilot

Interestingly, GitHub’s Copilot got it right on the first try. Without needing additional prompts, Copilot provided the correct and up-to-date method:

from google.api import metric_pb2 as ga_metric

descriptor = ga_metric.MetricDescriptor()
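For context, an empty descriptor still has to be filled in and registered before it is useful. The following is a rough sketch of how that might look with the 2.x client, not the exact output of any of the tools: the metric name `cpa`, the description text, and the function names are hypothetical, and the choice of a `GAUGE` metric with a `DOUBLE` value is an assumption that fits a dollar amount sampled over time.

```python
def metric_type(name: str) -> str:
    """Return the full metric type for a user-defined metric."""
    return f"custom.googleapis.com/{name}"


def create_cpa_descriptor(project_id: str, name: str = "cpa"):
    """Register a custom metric descriptor (hypothetical name and description)."""
    # Imported inside the function so the sketch can be loaded without
    # google-cloud-monitoring installed.
    from google.api import metric_pb2 as ga_metric
    from google.cloud import monitoring_v3

    client = monitoring_v3.MetricServiceClient()

    descriptor = ga_metric.MetricDescriptor()
    descriptor.type = metric_type(name)
    # Assumption: CPA is a point-in-time measurement stored as a float.
    descriptor.metric_kind = ga_metric.MetricDescriptor.MetricKind.GAUGE
    descriptor.value_type = ga_metric.MetricDescriptor.ValueType.DOUBLE
    descriptor.description = "Cost per acquisition, refreshed by a scheduled job."

    return client.create_metric_descriptor(
        name=f"projects/{project_id}", metric_descriptor=descriptor
    )
```

Calling `create_cpa_descriptor("my-project")` would need valid Google Cloud credentials and a real project ID; `my-project` here is only a placeholder.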

Conclusion

After several prompts, it became clear that both Cursor AI and O-1 were operating with outdated information, despite Google Cloud Monitoring 2.0 being released in October 2020. However, GitHub’s Copilot demonstrated its effectiveness by getting it right on the first attempt.

Lessons Learned

- Be Specific: Sometimes, a more detailed prompt can nudge AI towards the correct solution.

- Validate Responses: When using AI to assist with coding, always verify the suggested methods against the latest documentation.

- Patience Pays: It may take several iterations to get the right answer, especially with rapidly updating APIs and libraries.
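The "Validate Responses" point can be partly automated. As a small sketch (the helper name `symbol_exists` is made up for this post), a quick check like the one below tells you whether a suggested class or function actually exists in the version of the library you have installed, before you build anything on top of it.

```python
import importlib


def symbol_exists(module_path: str, attribute: str) -> bool:
    """Return True if the module can be imported and exposes the given attribute."""
    try:
        module = importlib.import_module(module_path)
    except ImportError:
        # The module itself is missing (or not installed in this environment).
        return False
    return hasattr(module, attribute)
```

For example, running `symbol_exists("google.cloud.monitoring_v3", "MetricDescriptor")` and `symbol_exists("google.api.metric_pb2", "MetricDescriptor")` against your own environment would have flagged the first two suggestions immediately. It does not replace reading the documentation, but it catches hallucinated or removed names cheaply.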

In this mini-battle of the AI models, Copilot emerged as the most reliable, while both Cursor AI and O-1 eventually reached the correct answer, albeit with more intervention.

Final Words

While AI tools like Copilot, Cursor AI, and O-1 are incredibly powerful and can significantly speed up development, they are not infallible. Skilled developers are still needed to validate, guide, and make the most of these tools, ensuring maximum efficiency and accuracy in the coding process. Happy coding!