How I got a perfect score on Google’s PageSpeed Insights

Today, I am going to show you how I took a website with a Google PageSpeed Insights score of 47 and brought it up to 98. Yes, you heard me right: ninety friggin’ eight, almost a perfect score! I will share all the details so you can just do it without spending hours (yes, literally hours) like I did.

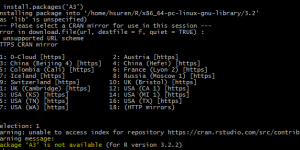

First, let me tell you about my server specs. I am hosting my website on Amazon Web Services (AWS) EC2, running Red Hat 4.8.3-9 with Apache/2.4.23. All right, ready to go? Let the show begin…

Optimize Images (Image Compression)

First, I optimized all the images on my server. Because this saves both disk space and bandwidth, it is a perfect place to start. I used two Linux image optimization tools that perform lossless compression (a smaller file size with no loss in quality): optipng for PNG files and jpegoptim for JPEG files.

```shell
find . -name '*.png' -print0 | xargs -0 optipng -nc -nb -o7
```

The command above recursively traverses the current directory, finds all the PNG files, and runs optipng on them. `-o7` selects optipng’s most exhaustive (and slowest) optimization level. `-nc` and `-nb` tell it to skip color type and bit depth reductions. Be careful with this: it is not reversible, because optipng overwrites the original images.

Now let’s do the same for all the JPG files:

```shell
find . -name '*.jpg' -print0 | xargs -0 jpegoptim
```

All done. As far as I know, both tools have a flag for preserving the original images. I will let you discover that one…
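The commands above overwrite files in place, so a backup is cheap insurance. Both tools have their own options for this: optipng’s `-keep` and jpegoptim’s `--dest` are worth checking in their man pages. A tool-agnostic route is to copy the originals first. Below is a minimal Python sketch of that idea. The script name `optimize_images.py`, the backup folder, and the function names are all hypothetical, and the sketch assumes optipng and jpegoptim are on your PATH.

```python
"""optimize_images.py (hypothetical name): back up PNG/JPEG files, then optimize them in place."""
import shutil
import subprocess
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def backup_images(root: Path, backup_dir: Path) -> list[Path]:
    """Copy every PNG/JPEG under root into backup_dir, mirroring the folder layout."""
    root = root.resolve()
    backup_dir = backup_dir.resolve()
    copied = []
    # sorted() collects the full listing before any copies are made.
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        if backup_dir in path.parents:  # never back up the backups
            continue
        target = backup_dir / path.relative_to(root)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)  # copy2 also keeps timestamps
        copied.append(path)
    return copied


def optimize(paths: list[Path]) -> None:
    """Run the same optimizers and flags as the commands above (they overwrite files)."""
    for path in paths:
        if path.suffix.lower() == ".png":
            subprocess.run(["optipng", "-nc", "-nb", "-o7", str(path)], check=True)
        else:
            subprocess.run(["jpegoptim", str(path)], check=True)


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: optimize_images.py SITE_ROOT BACKUP_DIR")
    else:
        optimize(backup_images(Path(sys.argv[1]), Path(sys.argv[2])))
```

You would run it as, for example, `python3 optimize_images.py /var/www/html /root/image-backup`. Unlike the `find` commands above, it also picks up `.jpeg` files and upper-case extensions.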

Leverage browser caching

Next, add caching headers to your files so that browsers can cache your content client-side. To do that, I created a configuration file at /etc/httpd/conf.d/perf.conf, where Apache picks it up, and inserted the following lines. Remember the location of this file; we will come back and add some more stuff soon.

```apache
<IfModule mod_expires.c>
    ExpiresActive On
    ExpiresByType image/jpg "access 1 year"
    ExpiresByType image/jpeg "access 1 year"
    ExpiresByType image/gif "access 1 year"
    ExpiresByType image/png "access 1 year"
    ExpiresByType text/css "access 1 month"
    ExpiresByType text/html "access 1 month"
    ExpiresByType application/pdf "access 1 month"
    ExpiresByType text/x-javascript "access 1 month"
    # .js is usually served as application/javascript or text/javascript
    ExpiresByType application/javascript "access 1 month"
    ExpiresByType text/javascript "access 1 month"
    ExpiresByType application/x-shockwave-flash "access 1 month"
    ExpiresByType image/x-icon "access 1 year"
    ExpiresDefault "access 1 month"
</IfModule>
```

This tells Apache (through mod_expires) to add `Expires` and `Cache-Control: max-age` headers to the responses for these files. As shown above, you can set a different duration for each file type.
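To confirm the headers actually reach the browser, you can request a file and inspect what comes back. Below is a minimal Python sketch using only the standard library. `check_cache.py` and its function names are hypothetical, and some servers may answer HEAD requests differently from GET. If I read mod_expires correctly, it counts a month as 30 days and a year as 365 days. That means "access 1 month" should show up as `max-age=2592000` and "access 1 year" as `max-age=31536000`.

```python
"""check_cache.py (hypothetical name): show the caching headers a URL really returns."""
import re
import sys
import urllib.request
from typing import Dict, Optional

# Matches the max-age directive, but not s-maxage.
MAX_AGE_RE = re.compile(r"(?:^|,)\s*max-age\s*=\s*(\d+)", re.IGNORECASE)


def parse_max_age(cache_control: Optional[str]) -> Optional[int]:
    """Return max-age in seconds from a Cache-Control value, or None if absent."""
    if not cache_control:
        return None
    match = MAX_AGE_RE.search(cache_control)
    return int(match.group(1)) if match else None


def fetch_cache_headers(url: str) -> Dict[str, Optional[str]]:
    """Send a HEAD request and return the Cache-Control and Expires headers."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return {
            "Cache-Control": response.headers.get("Cache-Control"),
            "Expires": response.headers.get("Expires"),
        }


if __name__ == "__main__":
    for url in sys.argv[1:]:
        headers = fetch_cache_headers(url)
        max_age = parse_max_age(headers["Cache-Control"])
        days = f"{max_age / 86400:.0f} days" if max_age is not None else "not set"
        print(f"{url}: max-age={max_age} ({days}), Expires={headers['Expires']}")
```

Usage would look like `python3 check_cache.py https://example.com/logo.png https://example.com/style.css`, where the URLs stand in for files on your own site.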

Minify JavaScript, Minify CSS and Minify HTML

Remember when I told you not to forget the location of the configuration file? It is time to revisit that file to deal with JS, CSS and HTML. Strictly speaking, the two snippets below compress these files with gzip rather than minify them.

I have two pieces of code for this section. The first one serves pre-compressed (gzipped) CSS and JS files when they exist on the server and the client’s browser supports gzip. It also stops mod_deflate from compressing those files a second time. Believe me, it is a TON of bandwidth savings.

```apache
<IfModule mod_headers.c>
    # mod_rewrite must be switched on for the rules below to run.
    # In server or virtual-host context %{REQUEST_FILENAME} is still the URL
    # path, so the "-s" file test only works as intended inside a
    # <Directory> block (or .htaccess) for your document root.
    RewriteEngine On

    # Serve gzip compressed CSS files if they exist
    # and the client accepts gzip.
    RewriteCond "%{HTTP:Accept-encoding}" "gzip"
    RewriteCond "%{REQUEST_FILENAME}\.gz" -s
    RewriteRule "^(.*)\.css" "$1\.css\.gz" [QSA]

    # Serve gzip compressed JS files if they exist
    # and the client accepts gzip.
    RewriteCond "%{HTTP:Accept-encoding}" "gzip"
    RewriteCond "%{REQUEST_FILENAME}\.gz" -s
    RewriteRule "^(.*)\.js" "$1\.js\.gz" [QSA]

    # Serve correct content types, and prevent mod_deflate double gzip.
    RewriteRule "\.css\.gz$" "-" [T=text/css,E=no-gzip:1]
    RewriteRule "\.js\.gz$" "-" [T=text/javascript,E=no-gzip:1]

    <FilesMatch "(\.js\.gz|\.css\.gz)$">
        # Serve correct encoding type.
        Header append Content-Encoding gzip
        # Force proxies to cache gzipped &
        # non-gzipped css/js files separately.
        Header append Vary Accept-Encoding
    </FilesMatch>
</IfModule>
```
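These rules only take effect when a `.css.gz` or `.js.gz` file already sits next to the original, and the snippet does not create those files for you. Below is a minimal Python sketch that generates them. It keeps the originals and only regenerates a `.gz` file when its source is newer. The name `precompress.py` and its function are hypothetical, and you would need to re-run it after every deploy.

```python
"""precompress.py (hypothetical name): write a .gz copy next to every CSS/JS file."""
import gzip
import sys
from pathlib import Path

SUFFIXES = {".css", ".js"}


def precompress(root: Path, level: int = 9) -> list[Path]:
    """Create or refresh style.css.gz / app.js.gz siblings; originals are left untouched."""
    written = []
    for path in sorted(root.rglob("*")):
        # "style.css.gz" has the suffix ".gz", so existing archives are skipped here.
        if not path.is_file() or path.suffix not in SUFFIXES:
            continue
        target = path.with_name(path.name + ".gz")
        # Only recompress when the source is newer than its .gz copy.
        if target.exists() and target.stat().st_mtime >= path.stat().st_mtime:
            continue
        target.write_bytes(gzip.compress(path.read_bytes(), compresslevel=level))
        written.append(target)
    return written


if __name__ == "__main__":
    for root in sys.argv[1:]:
        for target in precompress(Path(root)):
            print("wrote", target)
```

For example, `python3 precompress.py /var/www/html` would write `style.css.gz` next to `style.css`, and so on. Compression level 9 is used because the work happens once, ahead of time, rather than on every request.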

The second bit of code uses mod_deflate to compress all the files ending in .js, .css and .html on the fly.

```apache
<FilesMatch "\.(js|html|css)$">
    SetOutputFilter DEFLATE
</FilesMatch>
```
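To get a rough idea of what on-the-fly DEFLATE will save on your own files, you can gzip them locally and compare sizes. Below is a minimal Python sketch. `gzip_report.py` and its functions are hypothetical. Level 6 is an assumption meant to approximate zlib’s default level, which I believe mod_deflate uses unless `DeflateCompressionLevel` says otherwise.

```python
"""gzip_report.py (hypothetical name): estimate what DEFLATE saves on HTML/CSS/JS files."""
import gzip
import sys
from pathlib import Path
from typing import Tuple

SUFFIXES = {".html", ".css", ".js"}


def gzip_savings(data: bytes, level: int = 6) -> Tuple[int, int]:
    """Return (original_size, gzipped_size) in bytes."""
    return len(data), len(gzip.compress(data, compresslevel=level))


def report(root: Path) -> Tuple[int, int]:
    """Print per-file sizes under root and return the totals."""
    total_before = total_after = 0
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in SUFFIXES:
            before, after = gzip_savings(path.read_bytes())
            total_before += before
            total_after += after
            print(f"{path}: {before} -> {after} bytes")
    return total_before, total_after


if __name__ == "__main__":
    for root in sys.argv[1:]:
        before, after = report(Path(root))
        if before:
            print(f"total: {before} -> {after} bytes ({100 * (1 - after / before):.0f}% smaller)")
```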

Eliminate render-blocking JavaScript and CSS

This is the killer. We will use Google’s ModPageSpeed module (mod_pagespeed) to take care of everything that slows down rendering. Some people say ModPageSpeed alone is enough to boost performance by 50% or more. OK, here is the code.

```apache
ModPagespeed on
ModPagespeedRewriteLevel CoreFilters
ModPagespeedEnableFilters prioritize_critical_css
# defer_javascript broke some of my plugins; drop this line if yours misbehave
ModPagespeedEnableFilters defer_javascript
ModPagespeedEnableFilters sprite_images
ModPagespeedEnableFilters convert_png_to_jpeg,convert_jpeg_to_webp
ModPagespeedEnableFilters collapse_whitespace,remove_comments
ModPagespeedEnableFilters make_google_analytics_async
ModPagespeedEnableFilters inline_google_font_css
```

In case you do not have ModPageSpeed installed on your server, you can download and install it here. It is plug-and-play: once installed, it configures itself automatically.
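A quick way to check that the module is active is to look for the `X-Mod-Pagespeed` response header, which mod_pagespeed adds to the HTML it rewrites. As far as I know, the header’s value can be customized with `ModPagespeedXHeaderValue`. Below is a minimal Python sketch. `check_pagespeed.py` and its functions are hypothetical, and the header may be missing if the page was not rewritten or your configuration changes it.

```python
"""check_pagespeed.py (hypothetical name): is mod_pagespeed rewriting this page?"""
import sys
import urllib.request
from typing import Mapping, Optional


def pagespeed_header(headers: Mapping[str, str]) -> Optional[str]:
    """Return the X-Mod-Pagespeed value, matching the header name case-insensitively."""
    for name, value in headers.items():
        if name.lower() == "x-mod-pagespeed":
            return value
    return None


def check_page(url: str) -> Optional[str]:
    """Fetch the page with a normal GET and look for the mod_pagespeed header."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return pagespeed_header(dict(response.headers.items()))


if __name__ == "__main__":
    for url in sys.argv[1:]:
        version = check_page(url)
        if version:
            print(f"{url}: rewritten by mod_pagespeed {version}")
        else:
            print(f"{url}: no X-Mod-Pagespeed header found")
```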

If you have come this far, you have most likely already boosted your score substantially (mine went from 47 to 98). Let me know how it went for you by commenting below. I read and reply to ALL the comments.

Hi, interesting to see that Google’s ModPageSpeed (v.1.11.33.4) can’t do a perfect job. You wrote this article on 2016-11-23 and then updated it on 2016-12-08. Maybe you changed something, because right now your scores on Google PageSpeed Insights are low: 68/100 on mobile and 87/100 on desktop.

The “Eliminate render-blocking JavaScript and CSS in above-the-fold content” warning is still present.

There is a “Leverage browser caching” warning on resources out of your control (Facebook, Twitter and Google JavaScript), as usual.

“Prioritize visible content” warning on mobile, that’s where Google’s ModPageSpeed fails.

The “Reduce server response time” warning seems to indicate that you are not using a caching plugin; serving static content would easily fix that.

You are on an Amazon server with no HTTP/2 support, but it is fast. Still, Pingdom Website Speed Test scores you C72 with a 4.15s load time, GTmetrix gives PageSpeed B86 and YSlow D65 with a 3.9s load time, and on webpagetest.org the TTFB is too high, at 918ms.

Google states that its tool cannot guarantee perfect results, and your site proves it live.

Sorry about that.

I can easily help you get higher scores without using the Google module, if you are interested, with a definitive solution: manually generated critical CSS.

I sincerely appreciate your report and admire the effort you put into optimization, knowing how hard this topic is and what the real solution is for getting the maximum scores.

Hi Paul,

Thanks for taking the time to read and review the article. Yes, I fully agree that Google’s ModPageSpeed doesn’t guarantee a perfect result on its own (as I mentioned in my article as well). Honestly, I am not looking for any more performance boost than what I have right now, but I am really interested in seeing some of the websites you have optimized. Maybe we can get in touch via Twitter and chat more about this topic. You can find me @haktansuren.

Re: the performance drop since I updated the article: it was mainly due to the fact that I had to remove the “ModPagespeedEnableFilters defer_javascript” line, as it broke the functionality of some plugins I use. I will update the article accordingly as soon as I find some time. Once again, thanks for your input and suggestions!

Hi, I have a question. I found your post very useful.

But where do I add the mod_pagespeed configuration? In the Apache perf.conf file?

Hi Marcelo,

You can technically add it to any .conf file. I just create a new file under /etc/httpd/conf.d/ and add it there; Apache’s main configuration automatically reads this directory.

In my case, for my client’s website, I put it in /etc/apache2/sites-available/myclient.example.com.

This article is also perfect for multiple domains and subdomains all on one dedicated IP/VPS. I’m very happy.

I want to implement it in the global configuration (apache2.conf), but I’m afraid to do that on my current server. Maybe I’ll give it a try on another server.

Thanks a lot, Haktan Suren, for this great article.

Thanks for your feedback Catur, I am glad it was helpful for you 🙂

Thank you. It worked for my client’s website.

I tried several explanations from other websites, but they didn’t work.

Please write more articles like this.

Good read!


Great share! But why isn’t the data in GA4 accurate?

The reason is that they cannot accurately perform page-to-page tracking. For Google Analytics, holistic tracking takes precedence over event-based tracking. They are not overly concerned about missing data.